Evaluation

Evaluation of the fine-tuned model

Evaluation Details

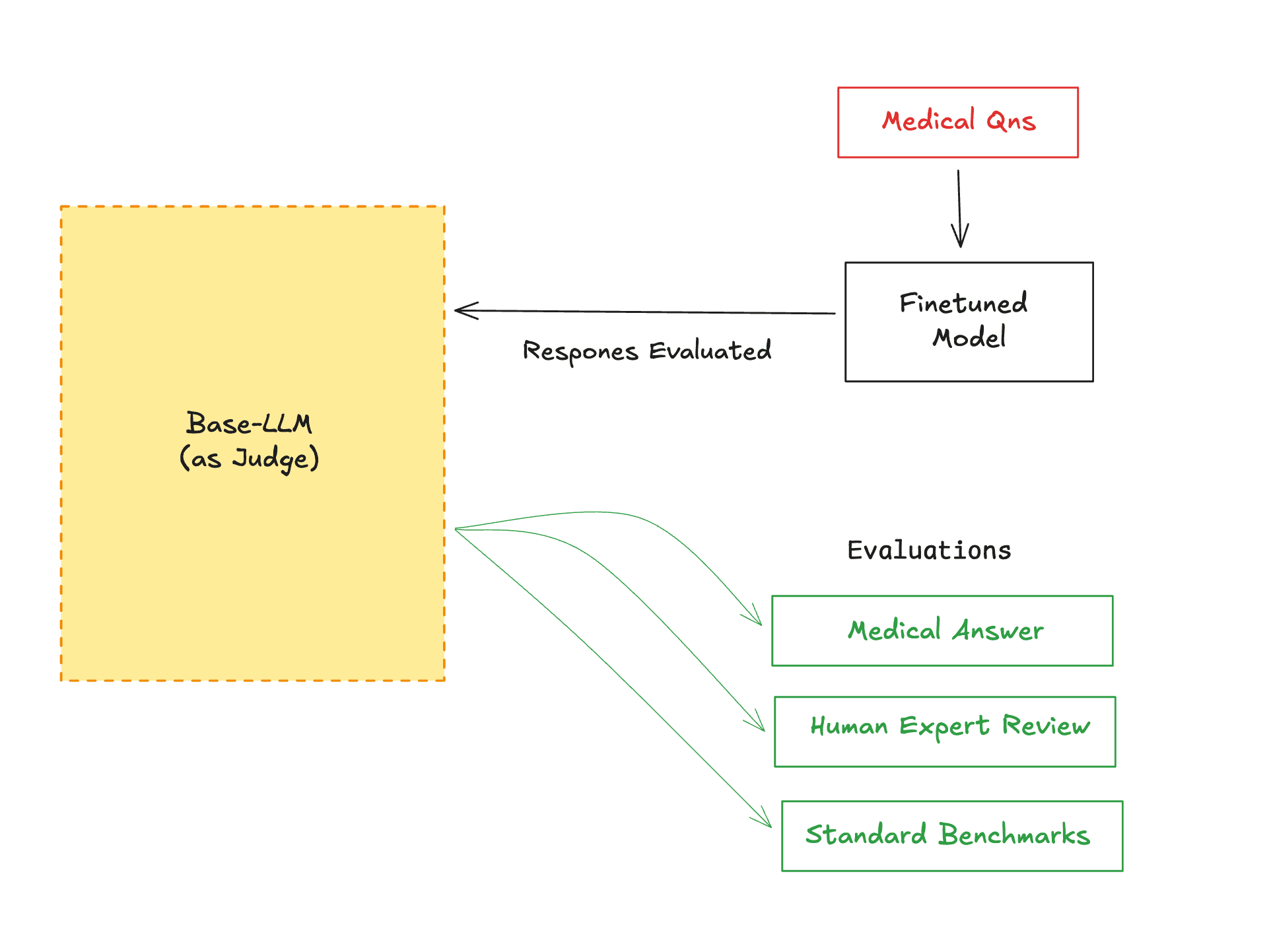

To evaluate the fine-tuned model, I used a base large language model as a judge: it assessed whether the fine-tuned model answered questions correctly. This indicates whether fine-tuning improved the model's understanding and generation of medical terminology and concepts.
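The judge-based check described above can be sketched roughly as follows. This is a minimal illustration, not the exact setup used for this model: the prompt wording, the reference-answer field, the CORRECT/INCORRECT reply format, and the `judge` callable (a stand-in for whatever function sends a prompt to the base model and returns its text) are all assumptions.

```python
from typing import Callable, Dict, Iterable

# Hypothetical grading prompt; the wording is an assumption, not the original prompt.
JUDGE_PROMPT = (
    "You are grading a medical question-answering model.\n"
    "Question: {question}\n"
    "Reference answer: {reference}\n"
    "Model answer: {answer}\n"
    "Reply with exactly one word: CORRECT or INCORRECT."
)


def parse_verdict(judge_reply: str) -> bool:
    """Return True only when the judge's reply starts with CORRECT."""
    return judge_reply.strip().upper().startswith("CORRECT")


def judge_accuracy(
    examples: Iterable[Dict[str, str]],
    judge: Callable[[str], str],
) -> float:
    """Fraction of examples the judge marks as correct.

    Each example needs the keys "question", "reference", and "answer".
    `judge` is any function mapping a prompt string to the judge's reply.
    """
    examples = list(examples)
    if not examples:
        return 0.0
    correct = 0
    for example in examples:
        prompt = JUDGE_PROMPT.format(**example)
        if parse_verdict(judge(prompt)):
            correct += 1
    return correct / len(examples)
```

Passing the judge in as a plain callable keeps the scoring logic independent of any particular model API, so the same loop can be tested with a stub before being pointed at a real model.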

There are several other techniques commonly used to evaluate machine learning and language models, including:

- Manual Review: Human experts assess the quality and accuracy of the model's responses.

- Automated Metrics: Quantitative measures such as accuracy, F1 score, BLEU, ROUGE, or perplexity.

- Benchmark Datasets: Testing the model on established datasets with known answers to compare performance.

- User Studies: Gathering feedback from end-users to evaluate real-world usefulness and relevance.

- A/B Testing: Comparing the fine-tuned model against the base model or other versions in live environments.

Combining these techniques provides a comprehensive view of the model's strengths and areas for improvement after fine-tuning.
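As an illustration of the automated-metrics option in the list above, the sketch below computes exact match and token-level F1 between a model answer and a reference answer. It is a simplified example under stated assumptions: normalization is just lowercasing and whitespace splitting, and the function names are hypothetical. These metrics were not necessarily used for this model.

```python
from collections import Counter


def normalize(text: str) -> list:
    """Lowercase and split on whitespace (a deliberately simple normalization)."""
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> bool:
    """True when both answers contain the same tokens in the same order."""
    return normalize(prediction) == normalize(reference)


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall against the reference."""
    pred_tokens = normalize(prediction)
    ref_tokens = normalize(reference)
    if not pred_tokens or not ref_tokens:
        # Two empty answers count as a match; one empty answer does not.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

Token-level F1 gives partial credit when an answer contains the reference term plus extra words, which exact match would score as a miss.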

Performance Metrics

The initial evaluation shows progress, but substantial work remains before the model performs reliably on medical-domain tasks.